At the time of writing this post, my home country, Finland, is in shock over successful attacks against the private psychiatric center Vastaamo, from which one or more black hat hackers obtained thousands of sensitive patient records by gaining access to an unprotected database server and network infrastructure. The data is being leaked on the Tor network in batches, bitcoin payments are being demanded, and threatening emails are being sent to panicking victims.

Foreword

The attack against the private psychiatric center is a sad event, but not actually a surprise to me. Overall, this is all about people and their sensitive, highly valuable data. It is about security, about what we practice and learn in penetration testing: how to get into weakly protected systems and cause extremely serious damage. In this case the damage was so serious that even the Finnish state leadership and President Sauli Niinistö have responded to it.

Real price of security ignorance

When…

…other priorities are more important in a project or organizational schedule

…security is not truly or actually understood

…security is too hard or too much effort

…security is too expensive or does not bring any direct profit to a company

…security requires too advanced knowledge

…security is seen as worthless effort

…security scenarios and risks are ignored or underestimated

…proper auditing or security systems are non-functional or non-existent

…critical data is or can be leaked via websites or any other end points

…unauthorized or uncontrolled personnel gain access to or learn details about critical environments

…digitalization trend! Features, apps and new sexy stuff are way higher priority than some boring security (because direct value of it can't be seen until it's too late to prevent damage)

Well, this is what happens. Especially when the target is a high-value one, like a company holding a database of sensitive patient records. It is much easier to take a proper preventive security approach than to deal with the consequences once an attacker has already gained permanent or temporary access to critical computers.

Follow-up consequences

As a consequence of the publicly reported patient record leak, thousands of people are suffering psychologically, the reputation of the whole psychiatric center is gone and the whole company may well go bankrupt. Not only that, but organizing proper attack recovery work requires a major effort from state authorities and volunteers (like white hat hackers and psychologists), changes to the law and whatnot. I may ask: was ignoring security worth all this, just for more short-term profit, a better quarterly result, happy project stakeholders or what?

According to public media, proper security validation and auditing were not required for the penetrated server system, as it was classified as a lower, B-level system. What is also bad: at least the chief executives of the company attempted to hide the security breach from the general public, direct stakeholders and major shareholders for over a year and a half.

Welcoming black hats

Basically, the company was welcoming black hats into their systems: the target was easy to penetrate and contained valuable data. From what I have read, access to critical data was gained with a combination of guessing root:root credentials and loopholes regarding some critical, publicly obtainable data. Closing these two giant holes in server infrastructure and internet service design is not rocket science; it is the most basic of the basics of any server security. In comparison, do you open your home's front door to strangers wandering on your street? Looks like this company did so. Welcome to loot us!

Let me be very clear: closing giant security holes like shell access as root does not even require any special knowledge. Leaving them open is just total ignorance and disrespect for the nature of the data the company was keeping on their servers - extremely sensitive patient data. Moreover, understanding attacker methods and motivations against sensitive environments is very basic knowledge, which is taught in penetration testing courses and in self-practiced penetration testing environments.
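To illustrate how little it takes to spot the root shell access hole, here is a minimal sketch that scans the text of an OpenSSH `sshd_config` for a few settings I would treat as risky. It assumes the server runs OpenSSH (the public reports do not say so), the helper name `find_risky_sshd_settings` and the sample config are made up for this post, and it ignores `Match` blocks, `Include` files and compiled-in defaults, so it is not a replacement for a real audit.

```python
import re

# Directives and the values this sketch treats as risky (my assumption, not a full policy).
RISKY_SETTINGS = {
    "permitrootlogin": {"yes"},
    "passwordauthentication": {"yes"},
    "permitemptypasswords": {"yes"},
}


def find_risky_sshd_settings(config_text):
    """Return (directive, value) pairs from sshd_config text that look risky."""
    findings = []
    for raw_line in config_text.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        parts = re.split(r"[\s=]+", line, maxsplit=1)
        if len(parts) != 2:
            continue
        directive, value = parts[0].lower(), parts[1].strip().lower()
        if value in RISKY_SETTINGS.get(directive, set()):
            findings.append((directive, value))
    return findings


# Hypothetical sshd_config excerpt, for illustration only.
sample = """
# hypothetical sshd_config excerpt
PermitRootLogin yes
PasswordAuthentication yes
PubkeyAuthentication yes
"""
print(find_risky_sshd_settings(sample))
```

On a real host you would feed it the contents of `/etc/ssh/sshd_config` and then actually change the flagged settings, for example to `PermitRootLogin no` combined with key-based logins.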

Which leads me to another topic that troubles me.

People do care about security & privacy but want practicability

In our every day digital life, why does security matter? How does it affect us all?

As our living environments get more and more digitalized, we must take measures to protect information from unauthorized access and use. This is not only for keen nerds or geeks, but for everyone using any network-connected digital device. You can have whatever title you like and high authority in your company or community. In the end, you have absolutely no authority over black hats. They do whatever is most valuable for them, and only for them.

Security is something that may be hard to see. It's your habits, your communication, everything you do. As in the private psychiatric center security breach, people ultimately trusted the company and its security practices and measures, likely without blinking an eye. They used the company's services. That's what they did. They trusted the company.

Which companies can you trust your personal data to?

You handed your fingerprints over to some private company? That's identifiable personal information and, in the wrong hands, it can cause a lot of damage. It is security based on your identifiable personal information. Did the company offer so much added value in their services that you just ignored security and gave up? Still, how can you trust that the information which should provide security is itself protected in a secure manner? You ultimately trust the company's security policy, but can you truly do so? In the end, I might even call it a kind of social engineering attack: a third party gains your trust by promising high-value services but ultimately, maybe purposely, damages your direct interests by selling data, or through data leaks or security breaches.

You set up a game server for your friends? Does it encrypt the client-server traffic? No? Excellent, I see a security hole. Did the game developers know or care about security, or was it just so much easier to implement insecure communications that they did just that?
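Encrypting client-server traffic does not have to be a huge effort. As a rough sketch, assuming a game protocol that runs over TCP (many games use UDP, where this does not apply directly), Python's standard `ssl` module can give a client a TLS context that verifies the server certificate. The function name `make_client_tls_context` is mine, not from any game or library.

```python
import ssl


def make_client_tls_context():
    """Build a client-side TLS context that verifies the server certificate."""
    context = ssl.create_default_context()  # enables certificate and hostname checks
    context.minimum_version = ssl.TLSVersion.TLSv1_2  # refuse older protocol versions
    return context


context = make_client_tls_context()
print(context.verify_mode == ssl.CERT_REQUIRED, context.check_hostname)
```

A client would then wrap its TCP socket with `context.wrap_socket(sock, server_hostname=...)`, passing the host name of the game server, before sending anything over it.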

Do you trust a widely known grocery market company and give up your data to their mobile app to obtain more value for your company-related services or targeted advertising? Do you trust they have truly developed their infrastructure and software with security and privacy in mind? How do you actually know? They gave their word? Right, well.

We trust others too much

I would say that, for most people, security is an important matter but not as important as practicality or feature-rich services. People do care about security when it does not cause any trouble in use: people dislike typing passphrases, and in general they like security when they are not personally involved in it. People may want encryption but do not actually understand whether it really takes place in application communications.

The more complex our digital systems become to manage or use, and the more configuration or features they involve, the more difficult and less interesting security becomes to deal with. In information technology, security is very much about technical aspects and deep practices. For many of us, security is still some vague principle, something you can hand over to others you truly trust: to your colleagues, to companies with a so-far good reputation, or to a commonly trusted brand.

Security becomes even more vague when third parties simply state that they take security seriously, or when companies highlight and brand their security principles with fascinating logos of shiny shields titled SECURITY or some comparable term. It's an easy trust: you are not assumed to have technical knowledge, but security has still been granted to you. Easy deal? Well, can you trust them?

As far as I understand, this kind of textual security branding was also done in the private psychiatric center patient data leak case, at least in their security reports. And people, even official authorities and security experts, trusted it. Well, you can see what happened. Let the results speak.

I see ignorance everywhere

I have friends and even family members who occasionally question, disapprove of or reject my opinions, maybe even fanaticism, about security, no matter whether I talk about personal laptops, mobile phones or physical environments. “Why are you so keen about this topic? What do you have to hide? Just enjoy services and give up your data like everyone else, you can't do anything about it if you want to participate in our activities. Just take off all your security because I don't care about your security policy at all since I just want to get the fucking game/app to work, or I get mad!”

Hell, I see this attitude even in software development to some extent, one of the most critical parts of the software life cycle. It's like some project managers, stakeholders or developers prefer features and deployment speed, and pay the price with poorly adopted security approaches and ignored best practices. At the same time, some stakeholders still take security for granted and demand it without assuming any extra effort or offering resources, time or budget. Yes, security absolutely requires proper resourcing and continuous system and network auditing practices; you can hardly get around it. It may cost, but are you ready to risk paying the ultimate price, as in the case of the Finnish psychiatric center patient data leak? Can you be proud in the end?

You are the user and you are personally responsible for your security & privacy

In server environments, server administrators, their supervisors, security teams and top decision makers in the organization are responsible for supporting and considering proper security measures. Organization policy towards security and time pressures play a major role as well.

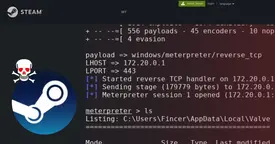

Security should concern everyone. In client environments, users are also responsible, as malicious backdoor programs can find their way into personal laptops or mobile phones. You may check my post demonstrating this in practice: Malicious trojan for Steam installer.

In the end, you are the user. If you don't care, who does it for you? On your personal devices, do you care more about features, free apps or ease of use? Well, let me say: the features and free apps you like so much tend to fetch your personal data without your knowledge (the “app is not the product, you are the product” cliché) and increase the potential attack surface of your devices (“more ways for hackers to access your devices”), and ease of use is a synonym for little or no security in many cases. Open source or closed source? Do you trust the vendor? Do you truly trust your friend's copy of a program?

Yes, and at this moment many people say fuck that: too many questions, too complicated. And in the end, they ignore security. Until it is discovered that ignoring it has, for years, caused serious damage to you or your close ones, out of your control, based on leaked information. And in that moment, you suddenly demand more security and heads on a platter. Who is responsible? You talk about GDPR and demand your rights as an EU citizen! Well, some companies do not give a fuck, especially ones outside the European Union. How do you protect your rights when the damage has already happened? Do these companies actually delete your data or just fake it? Can you trust them? I don't like to say it, but it is you, your blind trust and your habits which cause the root trouble. Or, if you actually care about security, your habits are the solution to many security issues. You just have to pick your side by adapting your behavior. It may not be easy; I don't say it's easy at all. It likely has a price, such as making your life a little bit more cumbersome.

For this very short moment, a security panic and awakening is happening on a national scale in Finland. However, when a month or more passes, the media stops talking about the patient record hack, and people hardly remember the topic. Still, cybersecurity reality does not simply vanish when the media stops talking about it. It only vanishes from your world if you stop using networked digital devices. Since that is not a real option in our digitalized world, security lives its own life every moment you use your devices. Make a single serious mistake with your device, get spoofed or something else, and you may create a backdoor and persistence for another black hat hacker.

Security in network environments and software projects

If you run physical network infrastructure yourself, you may need to invest in professional IDS/IPS/firewall systems combined with other security best practices you find suitable. Security practices still apply if you have a VPS instance somewhere.

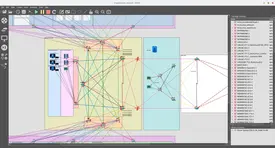

Dummy systems alone do not protect you or your data. You need to configure them, too. Look at comprehensive documentation, such as OWASP cheat sheets, official state authority security documentation (Finland: KATAKRI (Finnish National Security Auditing Criteria), PiTuKri (Criteria to Assess the Information Security of Cloud Services), etc.) and publicly available penetration testing reports. Always practice the principle of least privilege. Use proper testing software. Do penetration testing. Take a look at your network topology mapping. Isolate critical networks and the software and servers using them. Use and check logs. Consider something such as an Elasticsearch-based log monitoring system.
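To make "use and check logs" a bit more concrete, here is a small sketch that counts failed SSH password attempts per user and source address. It assumes OpenSSH-style "Failed password" messages as found in a typical auth log; the function name `count_failed_logins` and the sample lines are made up, and the addresses come from documentation ranges.

```python
import re
from collections import Counter

# Matches the OpenSSH "Failed password" log message.
FAILED_LOGIN = re.compile(
    r"Failed password for (?:invalid user )?(?P<user>\S+) from (?P<ip>\S+) port \d+"
)


def count_failed_logins(log_lines):
    """Count failed SSH password attempts per (user, source address)."""
    attempts = Counter()
    for line in log_lines:
        match = FAILED_LOGIN.search(line)
        if match:
            attempts[(match.group("user"), match.group("ip"))] += 1
    return attempts


# Hypothetical log lines, for illustration only.
sample_log = [
    "Oct 25 10:01:02 host sshd[1234]: Failed password for root from 203.0.113.7 port 40022 ssh2",
    "Oct 25 10:01:05 host sshd[1234]: Failed password for root from 203.0.113.7 port 40023 ssh2",
    "Oct 25 10:02:11 host sshd[1301]: Failed password for invalid user admin from 198.51.100.9 port 51514 ssh2",
    "Oct 25 10:03:00 host sshd[1377]: Accepted publickey for alice from 192.0.2.10 port 50000 ssh2",
]
for (user, ip), count in count_failed_logins(sample_log).most_common():
    print(user, ip, count)
```

A script like this is only a starting point. A proper log monitoring system does the same kind of counting continuously and alerts you when somebody keeps guessing root passwords.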

All in all, it comes down to your practices: a critical way of thinking, questioning your current security solutions, looking at the big picture, testing security policy in practice, continuously auditing for data leaks and network activities, taking proper countermeasures, and trusting the key people responsible for implementing security solutions. Establish proper security routines. On the other side of the coin, your non-technical colleagues likely do not want to take extra security steps when using their work-related devices or services. In the end, they demand practicality but also expect security.

In software projects, security must be taken into consideration. If you are familiar with the OSI model, security must cover it all: from physical environments through the network-related layers, ending ultimately at the applications themselves. The OSI model defines 7 layers. I may add another layer on top of it: the user layer. After all, people use software. Software may also have a system user, especially server daemons and services. You run server software as root (Linux/macOS) or SYSTEM (Windows)? Great: unless the system is air-gapped or otherwise tightly isolated, you have a critical security issue. Please isolate the process and/or system, and create an isolated system user and possibly a chrooted environment for it.
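As a minimal sketch of the "do not run as root" advice, a server process started as root can give up its privileges right after it has done the few things that really need them (such as binding a low port). This assumes a POSIX system and an already existing unprivileged account; the function names are my own, and it does not set up the chroot.

```python
import os
import pwd


def running_as_root(euid=None):
    """Return True if the given (or current) effective user ID is root."""
    if euid is None:
        euid = os.geteuid()
    return euid == 0


def drop_privileges(username):
    """Switch the current process to an unprivileged user (POSIX only)."""
    if not running_as_root():
        return  # already unprivileged, nothing to drop
    account = pwd.getpwnam(username)  # look up the target account
    os.setgroups([])                  # clear supplementary groups inherited from root
    os.setgid(account.pw_gid)         # change group first, while root still allows it
    os.setuid(account.pw_uid)         # finally give up root itself
    if running_as_root():
        raise RuntimeError("failed to drop root privileges")


print("running as root:", running_as_root())
```

In a real daemon you would call `drop_privileges()` with the name of your dedicated service account early in startup. Service managers can do the same for you, which is usually the cleaner option.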

In projects, some of your stakeholders do trust your security solutions but may not understand what they actually take, or why adapting an application solution may take an extra week. Again, they take security for granted. They trust your security by default but may not expect it to delay anything in the project's schedule, or do not want to invest in it because, in the end, they do not understand it, do not want to understand it, or do not care about it until it's too late. Other priorities matter so much more, such as scalability with Docker containers and Kubernetes (I acknowledge these are extremely important matters, too). To be fair, not all stakeholders or clients act like that; some actually demand proper security reports or that certain practices be followed (as security-wise, good clients do). However, let me explain one point of view: when implementing your software solution in a secure manner, all security layers must be considered. Where does your data get stored? Can the Docker containers we use ultimately be trusted? Are communications encrypted? Is the network/server environment trusted? What can happen if someone gets unauthorized access? If someone exploits a security hole in the application logic? If someone is able to perform a denial-of-service attack against the back-end server system? Well, when evaluating all these questions, your project may take an extra week. Wonder no more.

IT guys are people, too

Developers create new software; they write the actual code our devices use. Some developers are more advanced than others, and developers have different motivations: some act maliciously, some take privacy seriously. Developers are people too, and they make mistakes.

System administrators actually configure server security policy and server software; network architects may design it. Do they understand all the security measures? Do they think through potential attack scenarios? Do they perform penetration testing, directly attacking their own systems to test security in practice? Do they set up proper authentication, authorization and auditing policies and test that they actually work as expected? Administrators can also make mistakes, such as mistakenly believing that some server configuration works the way they think when it actually does not, and then never testing that the configuration works as expected.

Personal aspects

I have developed in Java, C, C++ and Python. I have made mistakes, created bugs and fixed them. Well, can you still trust my code? It depends on whether I care about testing the security aspects of my code and take them into consideration in practice. On whether I have an interest in selling your personal data or using it in harmful ways. Do I write even partially malicious code and target clients with it? Consider these aspects when pondering trust issues towards every company's fancy app, individual developers or developer teams.

To be clear, I don't sell any personal data, nor write malicious code on purpose. I did once backdoor a friend's Linux computer as root (with permission), but I value mutual trust above everything, so I neither looked at his personal files nor deployed any malicious data. I just did what I was asked to do (setting up a WLAN hotspot on a Linux host), nothing else, and exited. I communicated with the friend and asked him to do several tests during my backdoor access. Afterwards, I told the friend to destroy the backdoor stuff, and gave full and honest instructions for doing that. In the end, my main motivation and intention was not to destroy the mutual trust. We are still good friends, and nothing bad has happened to his digital security. Black hats do not operate that way. And yes, as these activities are malicious in nature, they always require proper agreement and permission from the target client. No exceptions. Unless you want to be a black hat yourself and are ready to face the consequences if you get caught.

About Fjordtek.com

I have designed, built and configured everything in it: the network infrastructure required to run this site, all server hardware and software components, and its security policy, including the authentication, authorization and auditing policy. I have fine-tuned the website back-end code. Additionally, I have proper network segmentation and security measures. Or do I? This is what I think about almost on a daily basis. I think through and test potential attack scenarios. For me, with multiple variables around, security is somewhat hard but not impossible to manage. I simply can't blindly take security for granted. Even if I trust my security policy, I must never trust it 100%. If someone does, that someone has a seriously mistaken attitude.

Conclusions

In a more digitalized world, it is so difficult to say whom you can trust. At their roots, companies and communities have different policies, priorities, organizational models, hierarchies, values, people working in them and whatever.

So, what to do? It's all about trust, watchful eyes, transparency - and something in your personal space: it culminates in the needs you satisfy with all the applications you use, and the third-party dependent or associated personal needs you satisfy in your everyday life.

Why does security matter?
